
Bayesian Optimization Packages

Online Tutorials

- scikit-optimize

- GPyOpt, based on GPy

- http://rmcantin.github.io/bayesopt/html/bopttheory.html: a very good introduction to BO, from BayesOpt's docs.

Online Discussions

Packages (as of 12/18/2020)

(Disclaimer) By labeling a package “(Deprecated)” in its subtitle, I mean it looks like it hasn't been updated or maintained for a long time. I didn't check or verify this. These are very subjective judgments to help me clear my mind. You are welcome to send me an email for any discussion.

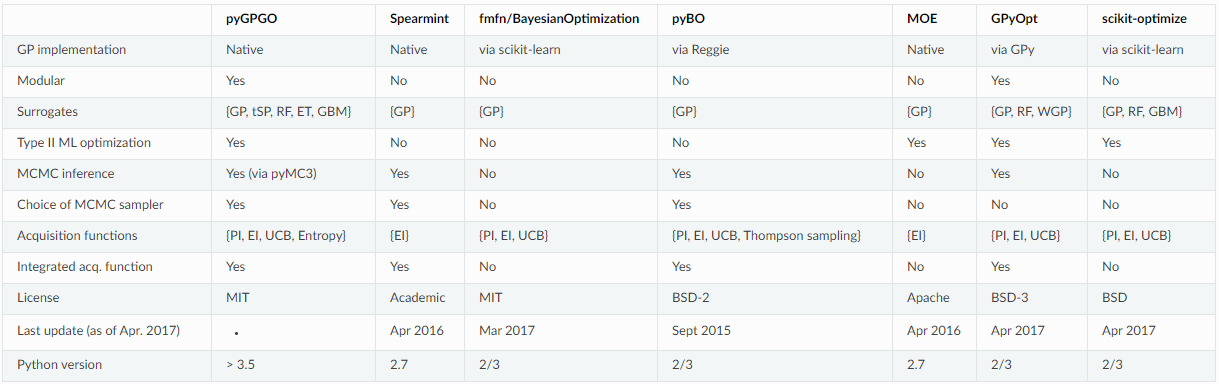

Comparison table

| Package | Language | Active? | Open-source | Version | Last Commit [note-1] | Surrogate | GP | Type-II ML optim. | MCMC | Acquisition Func. | Batch of points | Multi-Objective |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| pyGPGO | python | low | yes | v0.4.0.dev1 (11/02/2017) | 11/23/2020 | GP, tSP, RF, GBM | Native | MML, | pyMC3 | PI, EI, UCB, Entropy | | |
| * scikit-optimize | python | yes | yes | v0.8.1 (09/04/2020) | 09/29/2020 | GP, RF, GBM | scikit-learn | MML, | No | PI, EI, UCB | | |
| BayesianOptimization (bayes_opt) | python | yes | yes | v1.2.0 (05/16/2020) | 07/13/2020 | GP | scikit-learn | No | No | PI, EI, UCB | | |
| * GPyOpt | python, GPy | ended | yes | v1.2.6 (03/19/2020) | 11/05/2020 | GPP, RF, WGP | GPy | MML, | Yes | PI, EI, UCB | | |
| Trieste | python, gpflow 2 | very | yes | v0.5.0 (06/10/2021) | 06/10/2021 | GPR, SGPR, SVGP | gpflow 2 | ? | No | EI, LCB, PF, | | |
| BayesOpt | C++; Python, MATLAB | yes | yes | 0.9 (08/28/2018) | 05/14/2020 | GP, STP | Native | MML, ML, MAP, etc. | No | EI, LCB, MI, PI, Aopt, etc. | | |
| Emukit (Amazon) | python, GPy | yes | yes | v0.4.7 (03/13/2019) | 11/30/2020 | GPy model wrapper | GPy | ? | No, almost | EI, LCB, PF, PI, etc. | | |
| Spearmint | python, MongoDB | ended | yes | | | | | | | | | |
| Whetlab (Twitter) ← Spearmint | | no? | NO | | | | | | | | | |
| pyBO | python | ended | yes | v0.1 (09/18/2015) | 09/20/2015 | | | | | | | |
| MOE (Yelp) | python | ended | | | | | | | | | | |
| SigOpt ← MOE | | | NO | | | | | | | | | |
| GPflowOpt | python, gpflow 1 | ended | | | | | | | | | | |
| BoTorch (Facebook) | python, GPyTorch | yes, very | | v0.3.3 (12/08/2020) | (12/18/2020) | GPyTorch model wrapper | GPyTorch | Yes | | EI, UCB, PI, | yes | |

Notes about the table

Not finished yet. Missing a lot of things.

To do: go over MLE, MLL, MAP, …

[note-1] As of the last time I updated this data.
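As a starting point for going over these terms, here is how they differ for a GP surrogate. The notation is my own assumption, not taken from any package: a parametric model with parameters $\mathbf{w}$ for the ordinary case; otherwise a zero-mean GP prior with kernel matrix $K_\theta$, Gaussian noise variance $\sigma_n^2$, $n$ observations $\mathbf{y}$ at inputs $X$, and hyperparameters $\theta$ (lengthscales etc.). Treat it as a sketch, not any library's exact objective.

```latex
% Ordinary (type-I) maximum likelihood: maximize the likelihood of the data directly
\hat{\mathbf{w}}_{\mathrm{ML}} = \arg\max_{\mathbf{w}} \; p(\mathbf{y} \mid X, \mathbf{w})

% Type-II maximum likelihood (maximum marginal likelihood, "MML"): integrate out the latent f first
p(\mathbf{y} \mid X, \theta) = \int p(\mathbf{y} \mid \mathbf{f})\, p(\mathbf{f} \mid X, \theta)\, d\mathbf{f}

\log p(\mathbf{y} \mid X, \theta) = -\tfrac{1}{2}\mathbf{y}^{\top}(K_\theta + \sigma_n^2 I)^{-1}\mathbf{y}
  - \tfrac{1}{2}\log\lvert K_\theta + \sigma_n^2 I\rvert - \tfrac{n}{2}\log 2\pi

\hat{\theta}_{\mathrm{ML\text{-}II}} = \arg\max_{\theta} \; \log p(\mathbf{y} \mid X, \theta)

% MAP: add a log prior over the hyperparameters
\hat{\theta}_{\mathrm{MAP}} = \arg\max_{\theta} \; \big[\log p(\mathbf{y} \mid X, \theta) + \log p(\theta)\big]

% Full Bayes: keep the whole hyperparameter posterior (typically sampled with MCMC)
p(\theta \mid \mathbf{y}, X) \propto p(\mathbf{y} \mid X, \theta)\, p(\theta)
```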

What does “Type II ML optimization” mean in the original table from pyGPGO?

It should refer to how the hyperparameters of the surrogate model are trained. “Type II ML optimization” (maximizing the marginal likelihood) indicates an empirical Bayes capability, while a full Bayesian approach generally requires MCMC.
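To make “Type II ML” concrete, here is a minimal pure-Python sketch (my own, not taken from pyGPGO or any other package) that picks the lengthscale of a 1-D RBF-kernel GP by maximizing the log marginal likelihood over a small grid. The fixed noise variance, the unit signal variance and the grid values are assumptions for illustration; real packages typically optimize continuously with gradients and restarts rather than scanning a grid.

```python
import math


def rbf(x1, x2, lengthscale, variance=1.0):
    """Squared-exponential (RBF) kernel for scalar inputs."""
    return variance * math.exp(-0.5 * (x1 - x2) ** 2 / lengthscale ** 2)


def cholesky(a):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(a[i][i] - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L


def log_marginal_likelihood(xs, ys, lengthscale, noise=1e-2):
    """log p(y | X, theta) for a zero-mean GP with an RBF kernel (assumed noise level)."""
    n = len(xs)
    K = [[rbf(xs[i], xs[j], lengthscale) + (noise if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(K)
    # Forward substitution: solve L z = y, so that y^T K^{-1} y = z^T z.
    z = []
    for i in range(n):
        z.append((ys[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    data_fit = sum(v * v for v in z)
    log_det = 2.0 * sum(math.log(L[i][i]) for i in range(n))  # log|K|
    return -0.5 * data_fit - 0.5 * log_det - 0.5 * n * math.log(2 * math.pi)


def type2_ml(xs, ys, grid):
    """Empirical Bayes: keep the single lengthscale that maximizes the marginal likelihood."""
    return max(grid, key=lambda ell: log_marginal_likelihood(xs, ys, ell))


xs = [0.5 * i for i in range(7)]
ys = [math.sin(x) for x in xs]
best_lengthscale = type2_ml(xs, ys, [0.05, 0.3, 1.0, 3.0, 10.0])
print(best_lengthscale)
```

The chosen value is then treated as the “true” hyperparameter, which is exactly what makes this empirical Bayes rather than full Bayes.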

What does “Integrated acq. function” mean in the original table?

It is an “integrated version” of the acquisition function, used together with MCMC sampling of the hyperparameters. I still don't understand what “integrated” means.
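If I read Snoek et al. (2012), the paper behind Spearmint, correctly, “integrated” means the acquisition function is averaged (integrated) over the posterior of the GP hyperparameters instead of being evaluated at a single point estimate; with MCMC the integral is approximated by the mean over the hyperparameter samples. A minimal sketch, assuming each MCMC sample θᵢ has already produced a posterior mean and standard deviation at the candidate x (the numbers below are made up for illustration), using EI for minimization:

```python
import math


def norm_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2 * math.pi)


def norm_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2)))


def expected_improvement(mu, sigma, best):
    """EI for minimization: E[max(best - f(x), 0)] with f(x) ~ N(mu, sigma^2)."""
    if sigma <= 0:
        return max(best - mu, 0.0)
    z = (best - mu) / sigma
    return (best - mu) * norm_cdf(z) + sigma * norm_pdf(z)


def integrated_ei(posteriors, best):
    """Monte Carlo estimate of the integrated acquisition.

    `posteriors` holds one (mu, sigma) pair at x per hyperparameter sample theta_i,
    so the result approximates the integral of EI(x; theta) p(theta | data) d theta.
    """
    return sum(expected_improvement(mu, s, best) for mu, s in posteriors) / len(posteriors)


# Hypothetical GP predictions at one candidate x under three MCMC hyperparameter samples.
samples = [(0.2, 0.5), (0.1, 0.3), (0.4, 0.8)]
print(integrated_ei(samples, best=0.3))
```

With a single sample this reduces to ordinary EI at a point estimate, which is the empirical Bayes case.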


(Deprecated) GPyOpt (Python, GPy) (Sheffield)

https://github.com/SheffieldML/GPyOpt

- Docs. Mostly API descriptions and not very helpful for beginners.


- Some capabilities I'm interested in for now

- 5.1 Bayesian optimization with arbitrary restrictions

- 5.5 Tuning scikit-learn models

- 5.6 Integrating the model hyper parameters

- 5.8 Using various cost evaluation functions (what's this???)

- 5.10 External objective evaluation

- 6.1 Implementing new models

- 6.2 Implementing new acquisitions

- Although it is no longer updated, it looks quite complete, and it is pure Python. Consider using it.

BayesianOptimization, bayes_opt (Python)

https://github.com/fmfn/BayesianOptimization

- Sequential Domain Reduction

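- The bounds of the problem can be panned and zoomed dynamically in an attempt to improve convergence. (This feature is interesting; I haven't seen it elsewhere.) (Might it apply to my need to study optimization within different regions? Variable regions; follow up on this later.)

- An example tutorial

- Similar to scikit-optimize

- No documentation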

- In its advanced tour tutorial

- There is no principled way of dealing with discrete parameters using this package.

- GP kernels and parameter tuning are not supported.

- Very similar to scikit-optimize; does not support hyperparameter optimization of the GP. Not considering it for now.
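To get a feel for what “panning and zooming” the bounds means, here is a hypothetical, simplified domain-reduction step of my own. bayes_opt's actual transformer uses a more elaborate, adaptive contraction rule; the fixed zoom factor, the minimum width and the function name below are assumptions for illustration only.

```python
def pan_and_zoom(bounds, best_x, original_bounds, zoom=0.7, min_width=1e-3):
    """Hypothetical simplified domain-reduction step (not bayes_opt's actual rule).

    Each dimension's window is shrunk by `zoom` and re-centred (panned) on the
    best point found so far, then kept inside the original search bounds.
    """
    new_bounds = []
    for (lo, hi), x, (olo, ohi) in zip(bounds, best_x, original_bounds):
        width = max((hi - lo) * zoom, min_width)        # zoom: shrink the window
        new_lo, new_hi = x - width / 2, x + width / 2   # pan: centre it on best x
        # If the window sticks out of the original domain, slide it back inside.
        if new_lo < olo:
            new_lo, new_hi = olo, olo + width
        if new_hi > ohi:
            new_lo, new_hi = ohi - width, ohi
        # Final clip in case the window is wider than the original domain.
        new_bounds.append((max(new_lo, olo), min(new_hi, ohi)))
    return new_bounds


original = [(0.0, 10.0), (-5.0, 5.0)]
bounds = original
for incumbent in ([6.0, 1.0], [6.5, 0.5], [6.2, 0.8]):  # hypothetical best points
    bounds = pan_and_zoom(bounds, incumbent, original)
    print(bounds)
```

Each call narrows the search region around the current best point, which is the mechanism that could be reused to study optimization within different sub-regions.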

pyGPGO (python; scikit-learn, pyMC3, theano)

https://github.com/josejimenezluna/pyGPGO (Python)

- latest release: 11/02/2017

- Features (official):

- Different surrogate models: Gaussian Processes, Student-t Processes, Random Forests, Gradient Boosting Machines.

- Type II Maximum-Likelihood of covariance function hyperparameters.

- MCMC sampling for full-Bayesian inference of hyperparameters (via pyMC3).

- Integrated acquisition functions


- Acquisition functions (also available for the MCMC-sampling versions of the surrogate models):

- Probability of improvement: chooses the next point according to the probability of improvement w.r.t. the best observed value.

- Expected improvement: similar to probability of improvement, but also weighs the probability by the amount improved. Naturally balances exploration and exploitation and is by far the most used acquisition function in the literature.

- Upper confidence limit: features a beta parameter to explicitly control the balance of exploration vs. exploitation. Higher beta values lead to more exploration.

- Entropy: Information-theory based acquisition function.

- Hyperparameter treatment

- Empirical Bayes

- Full Bayesian, via MCMC in pyMC3
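The three analytic acquisition functions listed above can be written in a few lines given the surrogate's posterior mean `mu` and standard deviation `sigma` at a point. This is a sketch of the standard textbook forms for maximization, not pyGPGO's code; the `xi` margin and the default `beta=1.5` are my own arbitrary choices, and `sigma > 0` is assumed.

```python
import math


def _pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2 * math.pi)


def _cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2)))


def probability_of_improvement(mu, sigma, best, xi=0.0):
    """P(f(x) > best + xi) when f(x) ~ N(mu, sigma^2)."""
    return _cdf((mu - best - xi) / sigma)


def expected_improvement(mu, sigma, best, xi=0.0):
    """E[max(f(x) - best - xi, 0)]: the probability of improving weighted by the amount."""
    z = (mu - best - xi) / sigma
    return (mu - best - xi) * _cdf(z) + sigma * _pdf(z)


def upper_confidence_bound(mu, sigma, beta=1.5):
    """mu + beta * sigma: larger beta rewards uncertain points (more exploration)."""
    return mu + beta * sigma


for mu, sigma in [(0.0, 1.0), (0.5, 0.1), (-0.2, 2.0)]:  # hypothetical posteriors
    print(probability_of_improvement(mu, sigma, best=0.3),
          expected_improvement(mu, sigma, best=0.3),
          upper_confidence_bound(mu, sigma))
```

Note how the point with the largest `sigma` scores well under UCB even though its mean is the lowest; that is the exploration knob described for the beta parameter.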

Comparison with other software (official)


To do: extend this table to all packages on this page.

BayesOpt (C++; Python/MATLAB API)

https://github.com/rmcantin/bayesopt (C++)

- last commit: 7 months ago for the docs; 2 years ago for the code.

- docs: very detailed and comprehensive, covering both the code and the theory of BO

- C++ is the most straightforward and complete method to use the library.

- The Matlab/Octave interface is just a wrapper of the C interface.


- Surrogate models: sGaussianProcess, sGaussianProcessML, sGaussianProcessNormal, sStudentTProcessJef, sStudentTProcessNIG. The Student's t distribution is robust to outliers and heavy tails in the data.


- a full Bayesian approach: computes the full posterior distribution by propagation of the uncertainty of each element and hyperparameter to the posterior. In this case, it can be done by discretization of the hyperparameter space or by using MCMC (not yet implemented) (as of 05/15/2020).

- or an empirical Bayesian approach: computes a point estimate of the hyperparameters based on some score function and use it as a “true” value.

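- “Selection criteria” seems to be BayesOpt's term for the acquisition function.

- Most of the discussion seems to be about using it to optimize hyperparameters, but there are many different methods in this direction.

- The whole package is comprehensive and mature, covering C/Python/MATLAB. Consider using it later. How would I extend it? With C++?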
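Why is the Student's t distribution “robust to outliers”? A quick way to see it is to compare how much a single large residual costs under a Gaussian versus a Student's t likelihood. This only illustrates the general heavy-tail idea with univariate densities; it is not BayesOpt's Student-t process implementation, and the choice ν = 3 is an arbitrary assumption.

```python
import math


def gaussian_nll(r, sigma=1.0):
    """Negative log-density of residual r under N(0, sigma^2)."""
    z = r / sigma
    return 0.5 * z * z + math.log(sigma) + 0.5 * math.log(2 * math.pi)


def student_t_nll(r, nu=3.0, sigma=1.0):
    """Negative log-density of residual r under a Student's t with nu dof and scale sigma."""
    z = r / sigma
    log_norm = (math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
                - 0.5 * math.log(nu * math.pi) - math.log(sigma))
    return -(log_norm - (nu + 1) / 2 * math.log1p(z * z / nu))


for r in [0.0, 1.0, 3.0, 10.0]:
    print(r, round(gaussian_nll(r), 3), round(student_t_nll(r), 3))
```

The Gaussian penalty grows quadratically in the residual while the t penalty grows only logarithmically, so one outlier cannot dominate the fit of the surrogate.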

trieste (python, GPflow 2.x)

https://github.com/secondmind-labs/trieste

- Based on GPflow 2

- Docs: not very comprehensive, only API descriptions, not many insights

- the **acquisition rule** is the central object of the API, while acquisition functions are supplementary

- because some acquisition rules do not require an acquisition function, such as Thompson sampling

- uses wrappers for GPflow models

- Not sure which models are supported and which training methods are supported. Need to check some detailed tutorial.

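- Seems to rely heavily on TensorFlow skills…

- Overall, it is still under active development and depends on TF and GPflow; I guess debugging would be fairly difficult. Not suitable for me right now, but worth keeping an eye on.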
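The point about acquisition rules vs. acquisition functions can be illustrated without any library: a rule is anything that maps the current posterior to the next query point, and only some rules do so by maximizing an acquisition function. The function names below are hypothetical, not trieste's API, and the Thompson sampling version samples each candidate independently from its marginal, a simplification of proper Thompson sampling, which draws one joint sample from the GP posterior.

```python
import random


def thompson_sampling_rule(candidates, means, stds, rng):
    """Acquisition rule with no acquisition function: sample, then take the minimizer.

    Simplification: candidates are sampled independently from their marginal
    posteriors; proper Thompson sampling draws one joint sample from the GP.
    """
    samples = [rng.gauss(m, s) for m, s in zip(means, stds)]
    i_best = min(range(len(candidates)), key=lambda i: samples[i])
    return candidates[i_best]


def lcb_rule(candidates, means, stds, beta=2.0):
    """Acquisition rule built from an acquisition function (lower confidence bound)."""
    scores = [m - beta * s for m, s in zip(means, stds)]
    i_best = min(range(len(candidates)), key=lambda i: scores[i])
    return candidates[i_best]


# Hypothetical posterior summaries on a discrete candidate set (minimization).
candidates = [0.0, 0.5, 1.0]
means = [1.0, 0.5, 0.8]
stds = [0.1, 0.1, 0.5]
rng = random.Random(0)
print(lcb_rule(candidates, means, stds))
print([thompson_sampling_rule(candidates, means, stds, rng) for _ in range(5)])
```

Both rules share the same signature, which is presumably why a library would make the rule, not the acquisition function, the central abstraction.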

BoTorch (Python, PyTorch) (Facebook)

https://botorch.org/ (Python, PyTorch)

- BoTorch: A Framework for Efficient Monte-Carlo Bayesian Optimization

- support for state-of-the-art probabilistic models in GPyTorch, a library for efficient and scalable GPs implemented in PyTorch (and to which the BoTorch authors have significantly contributed).

- multi-task GPs, deep kernel learning, deep GPs, and approximate inference. This enables using GP models for problems that have traditionally not been amenable to Bayesian Optimization techniques.

- Bridging the Gap Between Research and Production (for my current research this may actually be a drawback; the code may be too heavy…)

- The primary audience for hands-on use of BoTorch are researchers and sophisticated practitioners in Bayesian Optimization and AI.

- We recommend using BoTorch as a low-level API for implementing new algorithms for Ax.

- When not to use Ax: If you're working in a non-standard setting, such as those with high-dimensional or structured feature or design spaces, or where the model fitting process requires interactive work, then using Ax may not be the best solution for you.

- GPyTorchModel provides a base class for conveniently wrapping GPyTorch models.

- Analytic Acquisition Functions: EI, UCB, PI

- Monte Carlo Acquisition Functions (What is this? I've only seen it here. Do other packages support it? Must it be used together with MCMC?)

- MC-based acquisition functions rely on the reparameterization trick, which transforms some set of ϵ from some base distribution into a target distribution. (see Monte Carlo Samplers)

- harnesses PyTorch's automatic differentiation feature (“autograd”) in order to obtain gradients of acquisition functions. This makes implementing new acquisition functions much less cumbersome, as it does not require to analytically derive gradients.

- Seems the whole package is built up upon the concept of posterior distribution. I've not fully understood this yet.

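- Its functionality seems extremely complete and highly extensible, and it's a big-company product, but the version number is still low and it iterates too fast. Not digging into it for now; keep an eye on it.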
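As far as I can tell, “Monte Carlo” in BoTorch refers to sampling function values from the model's posterior, not to MCMC over hyperparameters. The reparameterization trick writes a joint posterior sample at q candidate points as f = μ + L z, with L the Cholesky factor of the posterior covariance and z standard normal; with z fixed, the estimate becomes a deterministic (and differentiable) function of μ and L. Below is a plain pseudo-random, pure-Python sketch of batch expected improvement (q-EI, maximization) under that scheme; BoTorch itself uses torch tensors, (quasi-)MC samplers and autograd, and the function names here are hypothetical.

```python
import random
import math


def cholesky(a):
    """Lower-triangular Cholesky factor of a small SPD matrix."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(a[i][i] - s) if i == j else (a[i][j] - s) / L[j][j]
    return L


def draw_base_samples(n, q, seed=0):
    """Fixed standard-normal base samples z (reused across calls, as in the trick)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(q)] for _ in range(n)]


def mc_qei(mu, cov, best, base_samples):
    """MC estimate of E[max(max_j f_j - best, 0)] with f = mu + L z."""
    L = cholesky(cov)
    q = len(mu)
    total = 0.0
    for z in base_samples:
        f = [mu[i] + sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(q)]
        total += max(max(f) - best, 0.0)
    return total / len(base_samples)


# Hypothetical joint posterior at two candidate points.
mu = [0.1, 0.2]
cov = [[1.0, 0.6], [0.6, 0.5]]
print(mc_qei(mu, cov, best=0.3, base_samples=draw_base_samples(10000, 2)))
```

For q = 1 the estimate converges to the analytic EI, which is a handy sanity check; the MC version earns its keep for q > 1, where no simple closed form exists.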

scikit-optimize (python, scikit-learn)

https://github.com/scikit-optimize/scikit-optimize (In my opinion, a really bad name; anyway, the package is named skopt. Maybe they intend to do something bigger than just BO.)

- This tutorial shows how to use different GP kernels from scikit-learn.

- minimize (very) expensive and noisy black-box functions.

- It implements several methods for sequential model-based optimization.

- tree-based regression models: forest_minimize

- gradient boosted regression trees: gbrt_minimize (what is this?)

- BO with GP: gp_minimize

- uses Optimizer under the hood.

- can probabilistically choose LCB, EI, PI at each iteration

- skopt.learning.GaussianProcessRegressor (The implementation is based on Algorithm 2.1 of Gaussian Processes for Machine Learning (GPML) by Rasmussen and Williams.)

- base_estimator: “GP”, “RF”, “ET”, “GBRT” or a sklearn regressor, default: “GP”

- the ask() and tell() paradigm allows control over the detailed steps.

- do not perform gradient-based optimization.

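- Based on scikit-learn; feels relatively simple and reliable. Defaults to GP, with 3 other built-in options, but can accept other estimators, and allows control over the detailed steps of the optimization process. Consider using it.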
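The value of the ask/tell paradigm is that the optimizer never calls the objective itself: the user asks for a point, evaluates it however and whenever they like (e.g. a physical experiment), then tells the result back. skopt's `Optimizer` exposes `ask()` and `tell(x, y)`; to keep this sketch runnable without skopt, it uses a hypothetical `RandomSearchOptimizer` with the same two-method shape that simply proposes uniform random points instead of doing BO.

```python
import random


class RandomSearchOptimizer:
    """Hypothetical stand-in with an ask/tell interface; proposes points uniformly at random."""

    def __init__(self, bounds, seed=0):
        self.bounds = bounds
        self.rng = random.Random(seed)
        self.Xi, self.yi = [], []  # history of evaluated points and their values

    def ask(self):
        """Suggest the next point to evaluate (a real BO optimizer would use its surrogate)."""
        return [self.rng.uniform(lo, hi) for lo, hi in self.bounds]

    def tell(self, x, y):
        """Record an observation; a real BO optimizer would refit its surrogate here."""
        self.Xi.append(x)
        self.yi.append(y)

    def best(self):
        i = min(range(len(self.yi)), key=self.yi.__getitem__)
        return self.Xi[i], self.yi[i]


def objective(x):
    return (x[0] - 0.3) ** 2


opt = RandomSearchOptimizer([(-1.0, 1.0)], seed=42)
for _ in range(50):
    x = opt.ask()        # the user decides when and how to evaluate x
    y = objective(x)     # e.g. run an experiment, possibly offline
    opt.tell(x, y)
x_best, y_best = opt.best()
print(x_best, y_best)
```

Swapping in a real BO optimizer with the same interface would leave the loop unchanged.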

Emukit (Python, GPy) (Amazon backed?)

https://github.com/EmuKit/emukit

- enriching decision making under uncertainty.

- Multi-fidelity emulation; Bayesian optimisation; Experimental design/Active learning; Sensitivity analysis; Bayesian quadrature

- Docs: Emukit Vision

- Generally speaking, Emukit does not provide modelling capabilities, instead expecting users to bring their own models. Because of the variety of modelling frameworks out there, Emukit does not mandate or make any assumptions about a particular modelling technique or a library.

- Docs: Emukit Structure

- Emukit does not provide functionality to build models as there are already many good modelling frameworks available in python.

- We already provide a wrapper for using a model created with GPy.

- More complex implementations will enable batches of points to be collected so that the user function can be evaluated in parallel.

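- Supported acquisition functions for GP: EntropySearch, MultiInformationSourceEntropySearch, ExpectedImprovement, LocalPenalization, MaxValueEntropySearch, LowerConfidenceBound, ProbabilityOfFeasibility, ProbabilityOfImprovement, etc.

- It looks very fancy and is backed by Amazon, but somehow it doesn't feel reliable; I can't tell whether it's really BO or something more complex.

- Focuses on physical experiments and ML parameter optimization; BO is one part of a larger framework. Since it's based on GPy, I could start with GPyOpt first. Not considering it for now.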

(Deprecated) MOE (python) (Yelp)

https://github.com/Yelp/MOE MOE by Yelp is deployed by various companies in production settings. A downside however is that development stopped in 2017.

Any successor? Yes.


SigOpt was founded by the creator of the Metric Optimization Engine (MOE) and our research… (See: https://sigopt.com/solution/why-sigopt/)

Why was MOE abandoned? Or did it become closed source? See above.


(Deprecated) Cornell-MOE (python)

https://github.com/wujian16/Cornell-MOE

- latest commit: 10/23/2019

How does Cornell-MOE relate to the MOE BayesOpt package?

Cornell-MOE is based on the MOE BayesOpt package, developed at Yelp. MOE is extremely fast, but can be difficult to install and use. Cornell-MOE is designed to address these usability issues, focusing on ease of installation. Cornell-MOE also adds algorithmic improvements (e.g., Bayesian treatment of hyperparameters in GP regression, which improves robustness) and support for several new BayesOpt algorithms: an extension of the batch expected improvement (q-EI) to the setting where derivative information is available (d-EI, Wu et al, 2017); and batch knowledge gradient with (d-KG, Wu et al, 2017) and without (q-KG, Wu and Frazier, 2016) derivative information.

(Deprecated) Spearmint (Python 2.7, MongoDB)

- last commit: 04/02/2019

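- No longer really maintained with new features; it was commercialized and sold, and has a complicated background. Not studying it for now.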

(comment from BayesOpt's docs)

Spearmint (Python): A library based on [Snoek2012]. It is more oriented to cluster computing. It provides time based criteria. Implemented in Python with interface also to Matlab https://github.com/JasperSnoek/spearmint

It became Whetlab, which was then acquired by Twitter, and then… disappeared? (source-1 and source-2)

Set up a new subtitle if I find the eventual successor.


(Deprecated) GPflowOpt (python, TF 1.x)

https://github.com/GPflow/GPflowOpt

Requires GPflow 1, which depends on TF 1.x; as a result, its development has stopped.

(Commercial) SigOpt <-- MOE

Should be based on MOE.

Not skimmed yet

pybo (Python)

(comment from BayesOpt's docs)

pybo (Python): A Python package for modular Bayesian optimization. https://github.com/mwhoffman/pybo

DiceOptim (R)

(comment from BayesOpt's docs)

DiceOptim (R): It adds optimization capabilities to DiceKriging. See [Roustant2012] and http://cran.r-project.org/web/packages/DiceOptim/index.html

Limbo (Cpp)

https://github.com/resibots/limbo

Limbo (LIbrary for Model-Based Optimization) is an open-source C++11 library for Gaussian Processes and data-efficient optimization (e.g., Bayesian optimization) that is designed to be both highly flexible and very fast. It can be used as a state-of-the-art optimization library or to experiment with novel algorithms with “plugin” components.

Limbo is partly funded by the ResiBots ERC Project (http://www.resibots.eu).

* develop and study a novel family of such learning algorithms that make it possible for autonomous robots to quickly discover compensatory behaviors.

(comment from BayesOpt's docs)

Limbo (C++11): A lightweight framework for Bayesian and model-based optimisation of black-box functions (C++11). https://github.com/jbmouret/limbo

TS-EMO (MATLAB)

mlrMBO (R)

BO Alternatives

Some other packages that don't implement BO but do something similar.

Simple (Python)

https://github.com/chrisstroemel/Simple

- latest commit: 3 years ago, 01/28/2018

- Simple constructs its surrogate model by breaking up the optimization domain into a set of discrete, local interpolations that are independent from one another.

- Each of these local interpolations takes the form of a simplex, which is the mathematical term for the geometric concept of a triangle, but extended to higher dimensions.

- transforming the global optimization problem into a dynamic programming problem
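The “local interpolation on a simplex” idea can be sketched in 2-D with barycentric coordinates: inside one triangle, the surrogate value at a point is a weighted average of the objective values at the three vertices. This assumes linear interpolation of the vertex values; Simple's own implementation works in n dimensions and adds its own rule for choosing which simplex to split next, which this sketch does not attempt.

```python
def barycentric(p, a, b, c):
    """Barycentric coordinates of 2-D point p with respect to triangle (a, b, c)."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if det == 0:
        raise ValueError("degenerate simplex")
    l1 = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    l2 = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return l1, l2, 1.0 - l1 - l2


def simplex_interpolate(p, vertices, values):
    """Linear interpolation of vertex values inside one simplex (a local surrogate)."""
    weights = barycentric(p, *vertices)
    if min(weights) < -1e-12:
        raise ValueError("point lies outside this simplex")
    return sum(w * v for w, v in zip(weights, values))


triangle = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
f_at_vertices = [1.0, 2.0, 3.0]  # hypothetical objective values at the vertices
print(simplex_interpolate((1 / 3, 1 / 3), triangle, f_at_vertices))
```

Because each simplex only uses its own vertices, the local models are independent of one another, matching the description above.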